AWS has been doing some great work lately to reduce Lambda cold start times for all its Lambda runtimes, such as their VPC networking improvements and adding native support for automatically keeping your Lambda containers warm.

These changes have reduced the number of things that a developer needs to do to improve their Lambda start-up performance.

But there is still one variable under the control of the developer that has a significant effect on cold starts – the packaged bundle size.

Why bundle size still matters

Despite the improvements that AWS has made, your Lambda can still cold start. This extra startup time will impact the perceived responsiveness of user-facing functions, such as APIs or Slack applications.

If you’re using the serverless framework with JavaScript, it packages your code with all the npm dependencies by default. This can create very bloated Lambda functions. Much of this code is often unused. Since this code is in your application package, it still needs to be copied and extracted onto the file system every time a container is provisioned. The result is a slow response time for your customer.

Where back-end developers need to catch up

The packaged code size has long been a metric of importance to web developers. They spend hours optimizing the JavaScript, images, CSS, and HTML delivered to a web application. The time it takes to download affects the time to first paint and the time to first interaction, and it can have real impacts on customer retention and conversion.

Back-end developers have been able to get away with ignoring their code size for a long time because of the way it was packaged and executed. When you deploy a monolithic application, you typically deploy it to a long-running server. Once started, it is left running for days, so start-up times were not really a concern.

Such applications are typically clustered to horizontally scale for performance and elastically adjust capacity for changing customer load. As start-up times are already poor, clusters are normally overprovisioned to compensate. Developers have been able to hide their poor application start-up times behind this.

With serverless, the architectural concerns of the front-end come to the back-end. Serverless has freed developers from thinking about a number of operational concerns like infrastructure provisioning and resource optimization. In return, they now need to consider things like cold starts and resource connection management that weren’t a concern in the past.

Bundle size directly affects the start-up time of a Lambda. So, optimizing the amount of code that is deployed with a bundler will help reduce cold start time.

Thankfully for JavaScript developers, the tooling that has been used to bundle code is quite mature on the front-end. There are now many bundlers for JavaScript, and most of them are suitable for packaging back-end code running on Node.js as well.

How does a bundler (serverless webpack) help?

Bundlers work by looking at the imports and exports in your code and your referenced npm packages, building a dependency tree, and then generating a single JavaScript file that contains all the code rolled up together.

This brings a number of benefits:

- Include only the code used by your function.

  You may have multiple Lambda functions in the same serverless project that share some code, but it is unlikely that every function uses exactly the same set of shared dependencies.

  You can optimize your deployed Lambda size by including only the code that is imported by each function, not the code for all your functions.

- Optimize your npm dependencies.

  You may only be using some of your npm dependencies, or even only part of the code of those referenced. Some of your npm dependencies may exist twice because they are referenced by multiple modules.

  A bundler can follow the dependency graph into an npm module and grab only those files that are transitively referenced by your code, de-duplicating them where compatible versions exist.

- Use a single file for your source code.

  Using a single file instead of multiple files can reduce the number of file system accesses needed by Node.js to load your code.

  (This improvement is usually small. It is a side-effect of webpack’s origins on the web, where packaging a single file makes a huge difference over the network.)

The result is usually a much smaller and more efficient Lambda function than if you had just zipped up all your code and deployed it.
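The dependency-following behaviour described above can be sketched with a toy module graph. This is only an illustration of the idea, not how webpack is implemented; every module name below is hypothetical.

```javascript
// Toy module graph: each key is a module, each value lists what it imports.
// All module names are hypothetical and exist only for this example.
const moduleGraph = {
  'functions/createUser.js': ['lib/db.js', 'lib/validate.js'],
  'functions/sendReport.js': ['lib/db.js', 'lib/pdf.js'],
  'lib/db.js': ['node_modules/pg/index.js'],
  'lib/validate.js': [],
  'lib/pdf.js': ['node_modules/pdfkit/index.js'],
  'node_modules/pg/index.js': [],
  'node_modules/pdfkit/index.js': [],
  'node_modules/unused-lib/index.js': [],
};

// Walk the graph from one entry point and collect every module reachable
// through imports. Anything that is never reached stays out of the bundle.
function collectBundle(entry, graph) {
  const included = new Set();
  const pending = [entry];
  while (pending.length > 0) {
    const current = pending.pop();
    if (included.has(current)) continue; // shared modules are included once
    included.add(current);
    for (const dependency of graph[current] || []) {
      pending.push(dependency);
    }
  }
  return [...included].sort();
}

console.log(collectBundle('functions/createUser.js', moduleGraph));
```

In this sketch the bundle for `createUser` never picks up `pdfkit` or `unused-lib`, even though both are installed, which is the effect the list above describes.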

Getting started with serverless-webpack

The first step is to install the plugin and register it in your serverless file.

Change to your project directory and install the serverless-webpack npm module, along with webpack:

npm i -D serverless-webpack webpack

Add the plugin to your serverless.yaml file:

    plugins:
      - serverless-webpack
      ...
      # if you have serverless-offline, make sure it comes after serverless-webpack
      - serverless-offline

Last, add a webpack.config.js file in the root directory:

    const slsw = require('serverless-webpack');

    module.exports = {
      target: 'node',
      entry: slsw.lib.entries,
      mode: slsw.lib.webpack.isLocal ? 'development' : 'production',
      node: false,
      optimization: {
        minimize: false,
      },
      devtool: 'inline-cheap-module-source-map',
    };

At this point, we have configured the serverless-webpack plugin and given it a minimal webpack configuration.

If you have been reading the serverless-webpack documentation, you may have noticed I’ve included some extra options that don’t appear in their examples.

- `node: false` is unusual, but I’ve found it to be necessary for my configurations.

  Setting `node` as the target is normally enough to get webpack to compile for Node.js: it uses CommonJS `require()` for dependencies and ensures that built-in dependencies are not substituted with stubs. However, I’ve found it still interferes with the `process.env` global, which is needed to use Lambda environment variables.

- `minimize: false` turns off code minimization (uglification).

  Minimization makes stack traces harder to read and only reduces bundle size a bit, which is not enough to justify doing it for a server application. It also isn’t really needed where code isn’t distributed directly to users (like over the web). My configuration disables minimization because doing so greatly reduces the amount of CPU and memory needed at build time and helps avoid ‘out-of-memory’ errors (see the Troubleshooting section below).

Package once, or once per function

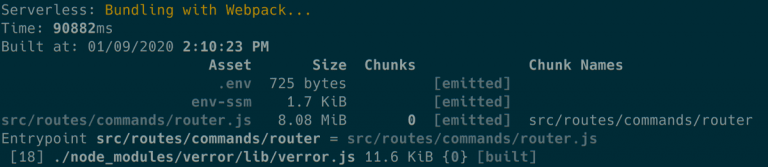

If you run serverless package at this point, your serverless output will change to include a typical webpack report showing build times and bundle sizes.

By default, serverless generates one package with all your code and deploys it for all your Lambda functions. serverless-webpack does the same, but it creates one JavaScript file per Lambda function instead, with all the code needed by each Lambda, including npm dependencies, bundled up together and then zipped.

NOTE: it doesn’t include non-source code files in the bundle. For these, see “Copying other files into the bundle” below.

You can find your packaged source bundle in the <project>/.serverless directory. You will find that this is still a huge reduction in size compared to the serverless default for non-trivial applications.
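One way to check the size reduction is to list the zip files in that directory. The helper below is a small sketch using only Node.js built-ins; the function name `listArtifactSizes` is my own, and it assumes the artifacts are `.zip` files sitting directly inside the directory.

```javascript
const fs = require('fs');
const path = require('path');

// List every .zip artifact in a directory with its size in bytes,
// largest first. Sub-directories are not searched.
function listArtifactSizes(directory) {
  return fs
    .readdirSync(directory)
    .filter((name) => name.endsWith('.zip'))
    .map((name) => ({
      name,
      bytes: fs.statSync(path.join(directory, name)).size,
    }))
    .sort((a, b) => b.bytes - a.bytes);
}

// Print a table when a .serverless directory exists in the current folder.
if (fs.existsSync('.serverless')) {
  console.table(listArtifactSizes('.serverless'));
}
```

Running it once before and once after adding serverless-webpack gives a quick before-and-after comparison.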

If you want to optimize further and create a bundle specific to each Lambda function, you can make serverless package each one individually by setting the following option in serverless.yaml:

    package:
      individually: true

serverless-webpack utilizes this value too, and it will create a separate deployment package for each Lambda with the single webpack bundle in each one. This takes much longer, but the result is a more optimized output.
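To make the per-function packaging more concrete, the sketch below shows how handler strings can be turned into webpack entry points, one per function. It mimics the idea behind `slsw.lib.entries` as I understand it and is not the plugin’s own code; the function names and paths are hypothetical.

```javascript
// Hypothetical function definitions, shaped like the `functions` section of
// a serverless config. Names and paths are made up for this example.
const functions = {
  createUser: { handler: 'src/users/create.handler' },
  sendReport: { handler: 'src/reports/send.handler' },
};

// Turn "path/to/file.exportName" into a webpack entry of the form
// { 'path/to/file': './path/to/file.js' }, one entry per handler file.
function entriesFromHandlers(functionDefinitions) {
  const entries = {};
  for (const { handler } of Object.values(functionDefinitions)) {
    // Everything before the last dot is the module path; the rest is the export.
    const modulePath = handler.slice(0, handler.lastIndexOf('.'));
    entries[modulePath] = `./${modulePath}.js`;
  }
  return entries;
}

console.log(entriesFromHandlers(functions));
```

Each entry becomes its own bundle, which is why two functions that import different dependencies can end up with very different package sizes.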

Using babel

The plugin documentation outlines how to use babel for transpilation (but I’ve included some of my own changes to ensure you get the most out of it).

Firstly, install the babel-loader and related babel npm packages:

npm i -D @babel/core @babel/preset-env babel-loader core-js@3

Add the following entry to your webpack.config.js (making sure to set the node target to the Node.js runtime version you are using on Lambda):

    module.exports = {
      ...
      module: {
        rules: [
          {
            // the dot must be escaped to match a literal ".js" extension
            test: /\.js$/,
            exclude: /node_modules/,
            use: [
              {
                loader: 'babel-loader',
                options: {
                  presets: [
                    [
                      '@babel/preset-env',
                      { targets: { node: '12' }, useBuiltIns: 'usage', corejs: 3 }
                    ]
                  ]
                }
              }
            ]
          }
        ]
      },
      ...
    }

Compared to the serverless-webpack documentation, the main differences with my configuration are:

- adding the `@babel/preset-env` preset to ensure that we don’t include transformations or polyfills for features that are already available in Node.js
- setting `useBuiltIns: 'usage'` so that any polyfills that are used are included on a case-by-case basis
- setting `corejs: 3` so that the old, buggy core-js v2 is not used to provide polyfills
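Because the `node` target has to match the Lambda runtime, one option is to derive it from the runtime identifier instead of hard-coding it. The helper below is a sketch of that idea; `nodeTargetFromRuntime` is a name I made up, and the runtime string is typed in by hand here rather than read from the serverless configuration.

```javascript
// Convert a Lambda runtime identifier such as 'nodejs12.x' into the major
// version string that @babel/preset-env accepts under targets.node.
function nodeTargetFromRuntime(runtime) {
  const match = /^nodejs(\d+)\./.exec(runtime);
  if (!match) {
    throw new Error(`Not a Node.js Lambda runtime: ${runtime}`);
  }
  return match[1];
}

// Assumption: 'nodejs12.x' is copied by hand from the provider section of
// serverless.yaml; keep the two in sync when you upgrade the runtime.
const presetEnvOptions = {
  targets: { node: nodeTargetFromRuntime('nodejs12.x') },
  useBuiltIns: 'usage',
  corejs: 3,
};

console.log(presetEnvOptions);
```

The resulting object can be passed as the options for `@babel/preset-env` in the babel-loader rule shown earlier.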

Copying other files into the bundle

Because serverless normally copies everything into the deployment package, you may get a rude shock when your code suddenly stops being able to load arbitrary non-code files from the file system in your Lambda function.
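As an illustration of the kind of code that is affected, here is a sketch of a helper that reads a non-code file sitting next to the deployed code. The function name and file name are hypothetical.

```javascript
const fs = require('fs');
const path = require('path');

// Reads a non-code file that is expected to be deployed alongside the code.
// baseDir defaults to the directory of the running file.
function loadTemplate(fileName, baseDir = __dirname) {
  return fs.readFileSync(path.join(baseDir, fileName), 'utf8');
}

// Example call (hypothetical file name):
//   const welcome = loadTemplate('welcome.txt');
// If welcome.txt was not copied into the package, readFileSync throws an
// ENOENT error when the Lambda runs.
```

A bundler only follows `require` and `import` statements, so a file that is opened through `fs` like this is invisible to it unless you copy it explicitly.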

As mentioned before, serverless-webpack only includes the source code bundle, effectively ignoring whatever is set in the include and exclude options under the package section of serverless.yaml. You must configure webpack to copy the non-source files you need using the copy-webpack-plugin.

Install it first with npm:

npm i copy-webpack-plugin

In your webpack.config.js include and configure the plugin with the file paths and globs you wish to copy:

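    const CopyPlugin = require('copy-webpack-plugin');
    ...
    module.exports = {
      ...
      plugins: [
        // Array form as accepted by copy-webpack-plugin v5;
        // v6 and later expect new CopyPlugin({ patterns: [...] })
        new CopyPlugin([
          'path/to/specific/file',
          'recursive/directory/**',
        ]),
      ],
      ...
    };

Troubleshooting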

Out of memory

Using webpack to package your code uses far more CPU and memory than normal, and it’s not unusual for Node.js to report an out-of-memory error. serverless-webpack runs a webpack instance per function, and each one has to combine and minimize the output.

    Serverless: Bundling with Webpack...

    <--- Last few GCs --->
    [8233:0x393fa70] 207373 ms: Scavenge 1885.9 (2042.4) -> 1885.9 (2042.9) MB, 9.3 / 0.0 ms (average mu = 0.262, current mu = 0.159) allocation failure
    [8233:0x393fa70] 207386 ms: Scavenge 1886.4 (2042.9) -> 1886.4 (2043.6) MB, 11.4 / 0.0 ms (average mu = 0.262, current mu = 0.159) allocation failure
    [8233:0x393fa70] 207404 ms: Scavenge 1887.0 (2043.6) -> 1886.9 (2044.1) MB, 15.8 / 0.0 ms (average mu = 0.262, current mu = 0.159) allocation failure

    <--- JS stacktrace --->
    ==== JS stack trace =========================================
    0: ExitFrame [pc: 0x1374fd9]
    1: StubFrame [pc: 0x13afd14]
    Security context: 0x39352fbc08a1 <JSObject>
    2: replace [0x39352fbccf51](this=0x2c60c4e7f591 <String[#27]: n//# sourceMappingURL=[url]>,0x16535c280e41 <JSRegExp <String[#7]: [url]>>,0x16535c280e79 <JSFunction (sfi = 0x35c9e77a48b9)>)
    3: /* anonymous */(aka /* anonymous */) [0x17c38da35a9] [/home/chris/dev/slack-app/bot/node_modules/webpack/lib/SourceMapDevToolPlu...

    FATAL ERROR: Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory
    1: 0x9da7c0 node::Abort() [node]
    2: 0x9db976 node::OnFatalError(char const*, char const*) [node]
    3: 0xb39f1e v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, bool) [node]
    4: 0xb3a299 v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, bool) [node]
    5: 0xce5635 [node]
    6: 0xce5cc6 v8::internal::Heap::RecomputeLimits(v8::internal::GarbageCollector) [node]
    7: 0xcf1b5a v8::internal::Heap::PerformGarbageCollection(v8::internal::GarbageCollector, v8::GCCallbackFlags) [node]
    8: 0xcf2a65 v8::internal::Heap::CollectGarbage(v8::internal::AllocationSpace, v8::internal::GarbageCollectionReason, v8::GCCallbackFlags) [node]
    9: 0xcf5478 v8::internal::Heap::AllocateRawWithRetryOrFail(int, v8::internal::AllocationType, v8::internal::AllocationAlignment) [node]
    10: 0xcc30c6 v8::internal::Factory::NewRawOneByteString(int, v8::internal::AllocationType) [node]
    11: 0x103253a v8::internal::Runtime_StringBuilderConcat(int, unsigned long*, v8::internal::Isolate*) [node]
    12: 0x1374fd9 [node]

The solution is to increase the amount of heap space available to Node.js when starting serverless (this only works in Node.js v8 and later):

node --max-old-space-size=4096 node_modules/.bin/serverless package --stage dev ...

(Also note that we have to invoke serverless with its full path in the `node_modules/.bin` folder because we are starting Node.js directly.)

If you don’t want to call Node.js directly, you can set the node options using an environment variable:

    export NODE_OPTIONS=--max-old-space-size=4096
    npx serverless package
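To confirm that the larger heap setting has actually been picked up, you can ask V8 for its current limit. This is a small sketch using the built-in `v8` module; the helper name `heapLimitMegabytes` is my own.

```javascript
const v8 = require('v8');

// Report the V8 heap size limit of the current process, in megabytes.
function heapLimitMegabytes() {
  return Math.round(v8.getHeapStatistics().heap_size_limit / (1024 * 1024));
}

console.log(`V8 heap limit: ${heapLimitMegabytes()} MB`);
```

Run it in the same shell after exporting `NODE_OPTIONS`; the reported value should be roughly the size you requested rather than the Node.js default.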

Correct line numbers and function names in stack traces

Because webpack transforms your code and packages it into the same file, stack traces in CloudWatch logs will reflect what is packaged by default. This can hamper your ability to debug your code.

webpack uses the devtool option to control source maps. These are extra comments included in the code that help debuggers and stack trace generators translate references in the bundled code back to the transformed or original source code.

In our sample webpack.config.js, we set the devtool option to 'inline-cheap-module-source-map', which should render stack traces with line references to the original source code.

This option can affect webpack build speed, so if you want to use something else, check out the webpack documentation on devtool.
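To see what an inline source map looks like in practice, the sketch below finds the `sourceMappingURL` comment at the end of a bundle and decodes it. The bundle here is a tiny fake built inside the example so that it is self-contained; `readInlineSourceMap` is a hypothetical helper, not part of webpack.

```javascript
// Find the inline source map comment at the end of a bundle and decode it.
// Returns null when the bundle has no inline (data: URL) source map.
function readInlineSourceMap(bundleSource) {
  const pattern =
    /\/\/# sourceMappingURL=data:application\/json[^,]*;base64,([A-Za-z0-9+/=]+)\s*$/;
  const match = pattern.exec(bundleSource);
  if (!match) return null;
  return JSON.parse(Buffer.from(match[1], 'base64').toString('utf8'));
}

// Build a tiny fake bundle with an embedded map (hypothetical file names).
const fakeMap = { version: 3, sources: ['src/handler.js'], names: [], mappings: '' };
const encoded = Buffer.from(JSON.stringify(fakeMap)).toString('base64');
const fakeBundle =
  'console.log("hi");\n' +
  `//# sourceMappingURL=data:application/json;charset=utf-8;base64,${encoded}\n`;

console.log(readInlineSourceMap(fakeBundle).sources);
```

A source map only helps if something reads it. In Node.js that is typically the source-map-support package or the `--enable-source-maps` flag available in newer Node.js versions, so check which one applies to your runtime.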